Forget the Cloud - 'The Fog' Is the Next Big Thing

The cloud is at the center of nearly every tech giant’s strategy, from Microsoft (NASDAQ:MSFT) and Intel (NASDAQ:INTC) to Amazon (NASDAQ:AMZN) and Google. And why shouldn’t it be? Everything’s moving to the cloud, where enormous data centers store millions of terabytes of information and run countless apps.

More important, that’s where billions of smart Internet of Things (IoT) nodes that everyone keeps talking about will connect, so enterprise users can make brilliant decisions based on real-time big-data analytics.

Or not.

Turns out, the cloud is not all it’s been hyped to be. Enterprise CIOs are coming to realize that many of the services and apps, and much of the data, that their users rely on for critical decision-making are better kept closer to the edge – on-premises or in smaller enterprise data centers.

Considering the exploding demand for mobility, business intelligence and real-time analytics, not to mention the enormous amount of data that countless smart IoT clients and applications are expected to generate, it makes complete sense to process and store some of it locally.

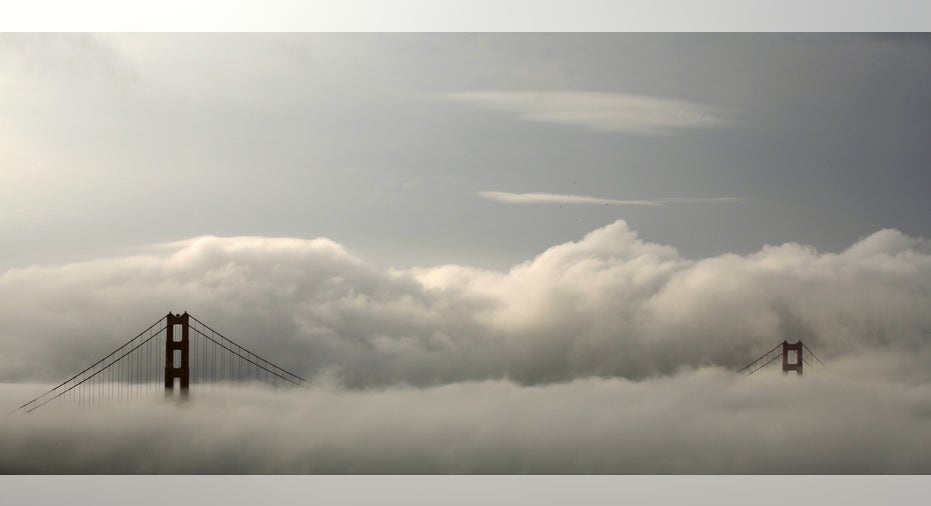

Say hello to the next big thing: fog computing.

A consortium of companies including Cisco, Dell, Microsoft, Intel and ARM, along with researchers at Princeton University, is betting that the future of enterprise computing will be a hybrid model where information, applications and services are split between the cloud and the fog. Actually, it was Cisco that coined the term “fog computing.”

If that sounds like a bunch of marketing hooey and silly weather metaphors, let’s take a step back and try to make sense of it all.

The cloud is a bunch of enormous centralized data centers owned by Apple, Google, Amazon, Facebook, and other tech companies. Instead of running applications and storing data on clients – notebooks, tablets and smartphones – we now do more and more of that in the cloud.

While it is somewhat costly in terms of network bandwidth that companies and consumers have to pay for, we’re mostly better off doing much of our computing and keeping our information secure on those big companies’ servers and storage systems. And we can access them on any device, anytime, anywhere.

So far, those data centers, backbone IP networks and cellular data networks have been able to handle the load, but sooner or later, that’s going to change. The reason is IoT.

When everything from cars and drones to video cameras and home appliances is transmitting enormous amounts of data from trillions of sensors, network traffic will grow exponentially. When that happens, real-time services that require split-second response times or location-awareness for accurate decision-making will need to be deployed closer to the edge to be useful.

Think about it. If you run a high-tech farm in California’s Central Valley and have sensor networks to monitor irrigation levels, soil moisture, and ambient temperature, you don’t necessarily need instantaneous monitoring or an on-premises server room. You’d probably be better off outsourcing that to the cloud.

On the other hand, if you’re the on-site manager of a large power plant with sensors controlling thousands of critical instruments, valves and turbines, you want to know what’s going on in real time. More important, machine-to-machine (M2M) communication should occur on your local network for optimum response times.

Likewise, if you’re driving down the freeway in your brand new Tesla and a sensor determines that tire pressure is low or the brakes are about to fail, you don’t want to waste precious milliseconds sending data to the cloud and back when the car’s own network is perfectly capable of communicating remedial measures.
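For the engineers in the audience, the reasoning behind those three examples boils down to a simple latency check, sketched here in Python. Everything in it is an assumption made up for illustration: the round-trip figure, the deadlines, and the names `Workload` and `place` are hypothetical, not taken from any vendor's fog platform.

```python
from dataclasses import dataclass

# Illustrative assumption, not a measurement: a round trip over the
# wide-area network to a remote cloud data center.
CLOUD_ROUND_TRIP_MS = 100.0


@dataclass
class Workload:
    name: str
    deadline_ms: float  # how quickly a response is needed (assumed)


def place(workload: Workload) -> str:
    """Send the work to the cloud only if the round trip fits the deadline."""
    if CLOUD_ROUND_TRIP_MS <= workload.deadline_ms:
        return "cloud"
    # Otherwise keep it local: the plant network, the car's own network, etc.
    return "fog"


# Hypothetical deadlines for the three scenarios described above.
workloads = [
    Workload("farm soil-moisture report", deadline_ms=60_000),
    Workload("power-plant valve control", deadline_ms=20),
    Workload("vehicle brake warning", deadline_ms=5),
]

for w in workloads:
    print(f"{w.name}: {place(w)}")
```

With these made-up numbers, the farm lands in the cloud while the power plant and the car stay in the fog. A real placement decision would also weigh bandwidth cost, reliability and security, not latency alone.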

Luckily, processing power, memory speed, and storage capacity on client devices keep increasing, as does the bandwidth of cellular data, Wi-Fi, Bluetooth and other network technologies. That will enable distributed fog networks in enterprise data centers, around cities, in vehicles, in homes and neighborhoods, and even on your person via wearable devices and sensors.

By balancing user and IoT needs between the cloud and fog, network architects can achieve optimum adaptability and scalability. At least that’s the theory.

If you’ve been around long enough, this is all probably starting to sound strangely familiar. When I started out in engineering back in the day, computing was centralized. We accessed mainframes in corporate-wide computer rooms from dumb terminals. Then came the PC and distributed computing via local and wide area networks.

The Internet and broadband networks gave rise to the cloud, and computing began to revert to a more centralized client-server model. But before that transition has even finished, we’re looking to decentralize again, albeit with far more complex networks and sophisticated service models.

The more things change, the more they stay the same. Especially the media hype over the next big thing.