Amazon touts artificial intelligence products, services for businesses

Amazon sees 3 layers to its opportunity in AI space, is investing heavily in all of them: CEO Andy Jassy

Amazon updated investors on its latest artificial intelligence (AI) initiatives during the company’s second-quarter earnings call on Thursday.

Tech giant Amazon and its cloud computing subsidiary, Amazon Web Services (AWS), have invested heavily in AI. While consumer-facing AI chatbots and image generators have drawn significant attention, Amazon has focused its efforts on providing AI services and large language models to business clients.

"Generative AI has captured people’s imaginations, but most people are talking about the application layer, specifically what OpenAI has done with ChatGPT," said CEO Andy Jassy. "It’s important to remember that we’re in the very early days of the adoption and success of generative AI and that consumer applications is only one layer of the opportunity."

Jassy explained that Amazon sees three key opportunities in the AI space: making the chips needed to power AI programs and large language models (LLMs), providing a platform for businesses to make their own AI tools and LLMs using those made by Amazon and other firms, and creating tools like coding copilots that individuals can use.

"At the lowest layer is the compute required to train foundational models and do inference or make predictions," Jassy explained. He went on to note Amazon’s partnership with Nvidia on high-end AI chips, known as graphics processing units (GPUs).

While customers are excited about the Nvidia chips Amazon offers, Jassy said that a scarcity of those high-end chips prompted the company to begin developing its own AI chips for LLM training and inference. Those chips are now in their second versions and present a "very appealing price performance option for customers building and running large language models."

"We’re optimistic that a lot of large language model training and inference will be run on AWS’ Trainium and Inferentia chips in the future," Jassy said.

Amazon CEO Andy Jassy said the tech giant views its opportunity in the AI space in three areas – computing power through high-end chips, AI tools and large language models as a service, and consumer-facing tools. (David Ryder/Bloomberg via Getty Images / Getty Images)

Jassy explained that Amazon views the "middle layer" of AI opportunities as being "large language models as a service." He said this area is compelling to companies that don’t want to invest significant money and time in building their own large language models. AWS is addressing this opportunity with Amazon Bedrock.

Bedrock is a service Amazon unveiled this spring that allows customers to customize models with their own data without leaking proprietary data into the model, while offering the same security, privacy and platform features as the rest of AWS. Amazon’s own large language models, known as Titan, are available on Bedrock, as are third-party models from Anthropic, Stability AI, AI21 Labs and Cohere.

"Customers including Bridgewater Associates, Coda, Lonely Planet, Omnicom, 3M, Ryanair, Showpad and Travelers are using Amazon Bedrock to create generative AI applications," Jassy noted.

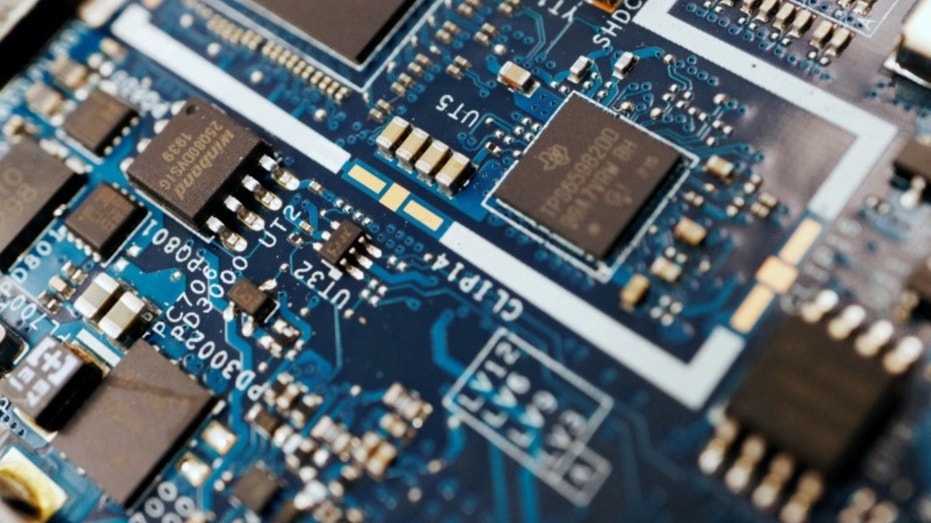

Amazon is making high-end semiconductors capable of powering AI models through a partnership with Nvidia, in addition to developing its own chips. (Reuters/Florence Lo/Illustration / Reuters Photos)

He added that Amazon announced new capabilities for Bedrock in July, including new models from Cohere, Anthropic and Stability AI, as well as an agents feature that lets customers build conversational agents that deliver personalized, up-to-date answers based on proprietary data.

As for the apps that run on large language models, Jassy said that "one of the early compelling generative AI applications is a coding companion." He explained that this use case is why the company built Amazon CodeWhisperer, an AI-powered coding companion that recommends code snippets directly in the code editor to accelerate developer productivity.

Jassy said that all of Amazon’s teams are building generative AI applications to improve customers’ experiences. However, he noted, "While we will build a number of these applications ourselves, most will be built by other companies, and we’re optimistic that the largest number of these will be built on AWS."

"Remember, the core of AI is data. People want to bring generative AI models to data, not the other way around," Jassy said. "AWS not only has the broadest array of storage, database, analytics and data management services for customers, it also has more customers and data stored than anybody else."

Amazon’s stock price was up over 9.5% in after-hours trading following Thursday’s earnings call, rising from its closing price of $128.91 per share to more than $141 a share.