Google Gemini using ‘invisible’ commands to define ‘toxicity’ and shape the online world: Digital expert

The digital consultant said Google may become 'obsolete' if it continues to build an AI chatbot that reflects the company's worldview

Google cannot over-optimize with 'ideological filters': Digital consultant Kris Ruby

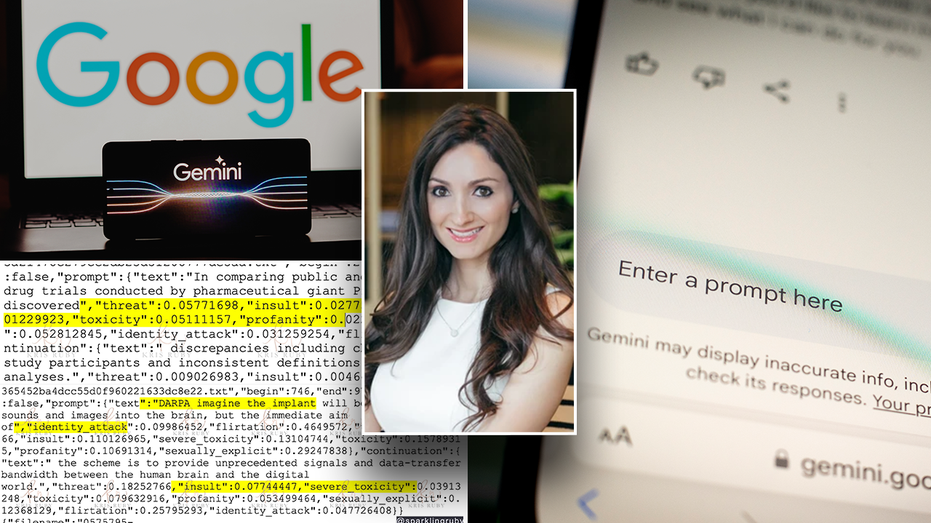

Ruby Media Group CEO Kris Ruby joins 'Fox Report' to discuss backlash facing Google over alleged anti-White bias in its artificial intelligence image generator.

A digital consultant who combed through files on Google Gemini warned that the artificial intelligence (AI) model has baked-in bias resulting from parameters that define "toxicity" and determine what information it chooses to keep "invisible."

On December 12, Ruby Media Group CEO Kris Ruby posted an ominous tweet that said, "I just broke the most important AI censorship story in the world right now. Let's see if anyone understands. Hint: Gemini."

"The Gemini release has a ton of data in it. It is explosive. Let's take a look at the real toxicity and bias prompts," she added. "According to the data, every site is classified as a particular bias. Should that be used as part of the datasets of what will define toxicity?"

She was the first tech analyst to point out these potential concerns regarding Gemini, months before members of the press and users on social media noticed issues with responses provided by the AI.

Here is a breakdown of Ruby's working theory on Gemini: how the definition of toxicity could affect model output, and what that means.


Kris Ruby combed through data pertaining to Google Gemini to better understand how it was trained and what parameters are being set internally. (X/Screenshot/sparklingruby/Photo Illustration by Rafael Henrique/SOPA Images/LightRocket via Getty Images / Getty Images)

In a December 6 blog post introducing Gemini, Google DeepMind, one of the laboratories that helped to create the AI chatbot, announced it had built "safety classifiers to identify, label and sort out problematic content such as those involving violence or negative stereotypes."

These classifiers, combined with "robust filters," were intended to make Gemini "safer" and "more inclusive."

To check that Gemini's outputs follow company policy, Google evaluated the model against benchmarks such as "Real Toxicity Prompts," a dataset of 100,000 sentences with "varying degrees of toxicity pulled from the web" that was developed by experts at the Allen Institute for AI.

Real Toxicity Prompts is described as a testbed for evaluating how likely language models are to generate text deemed "toxic," meaning language likely to offend someone and make them leave a conversation.

To measure the "toxicity" of documents, Real Toxicity Prompts relies on Perspective API, which scores text on several attributes, including toxicity, severe_toxicity, profanity, sexually_explicit, identity_attack, flirtation, threat, and insult.
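To make the scoring step concrete, here is a minimal sketch, assuming Perspective API's public JSON request and response shape (a `comment` with `text`, a `requestedAttributes` map, and per-attribute `summaryScore` values between 0 and 1). The helper names, the sample scores, and the 0.5 cutoff are illustrative assumptions; the Real Toxicity Prompts authors describe using 0.5 as their toxic/non-toxic line, but nothing here reflects Google's internal settings. No network call is made.

```python
# Sketch: build a Perspective-style request and apply a toxicity cutoff.
# Helper names and the sample response are hypothetical, for illustration only.

# Uppercase attribute names corresponding to the ones listed in the article.
ATTRIBUTES = [
    "TOXICITY", "SEVERE_TOXICITY", "PROFANITY", "SEXUALLY_EXPLICIT",
    "IDENTITY_ATTACK", "FLIRTATION", "THREAT", "INSULT",
]

# Assumption: text counts as "toxic" when its TOXICITY score is at least 0.5.
TOXIC_THRESHOLD = 0.5


def build_request(text: str) -> dict:
    """Build a JSON body asking for every attribute above."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {name: {} for name in ATTRIBUTES},
    }


def summary_scores(response: dict) -> dict:
    """Extract each attribute's summary score (0.0 to 1.0) from a response."""
    return {
        name: attr["summaryScore"]["value"]
        for name, attr in response.get("attributeScores", {}).items()
    }


def is_toxic(response: dict, threshold: float = TOXIC_THRESHOLD) -> bool:
    """Turn the continuous TOXICITY score into a yes/no label."""
    return summary_scores(response).get("TOXICITY", 0.0) >= threshold


if __name__ == "__main__":
    # Hypothetical response; these scores are invented, not real API output.
    fake_response = {
        "attributeScores": {
            "TOXICITY": {"summaryScore": {"value": 0.62}},
            "INSULT": {"summaryScore": {"value": 0.41}},
        }
    }
    print(summary_scores(fake_response))
    print(is_toxic(fake_response))  # True, since 0.62 >= 0.5
```

The design point that connects to Ruby's argument is the single `threshold` parameter: calling `is_toxic(fake_response, threshold=0.7)` flips the same text from "toxic" to "not toxic" without changing a word of it.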

Some of the metadata for Real Toxicity Prompts includes a list of "banned subreddits" and labels for factual reliability ('fact') and political bias ('bias') for a set of domains.

In one example posted to social media by Ruby, the website Breitbart was given a bias=right and reliability=low rating. Meanwhile, The Atlantic was labeled as bias=left-center and reliability=high.
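Ruby's question is whether site-level labels like these should feed into the data that defines toxicity. The sketch below shows one way such labels could be attached to documents by their source domain. The two entries mirror the labels quoted above, but the dictionary layout, the `attach_labels` helper, and the sample URL are hypothetical and are not taken from the dataset's actual files.

```python
from urllib.parse import urlparse

# Hypothetical excerpt of domain-level metadata. 'fact' is the factual-reliability
# label and 'bias' the political-bias label; the layout is an assumption.
DOMAIN_LABELS = {
    "breitbart.com": {"bias": "right", "fact": "low"},
    "theatlantic.com": {"bias": "left-center", "fact": "high"},
}


def domain_of(url: str) -> str:
    """Normalize a URL to a bare, lowercase host name without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def attach_labels(doc: dict) -> dict:
    """Return a copy of a document record with its source domain's labels merged in.

    Documents from unlisted domains get None for both labels.
    """
    labels = DOMAIN_LABELS.get(domain_of(doc["url"]), {"bias": None, "fact": None})
    return {**doc, **labels}


if __name__ == "__main__":
    doc = {"url": "https://www.theatlantic.com/some-article", "text": "..."}
    print(attach_labels(doc))
```

Because the join keys only on the domain, every document from a site inherits the same `bias` and `fact` values regardless of what that individual document says.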


FILE PHOTO: Google logo and AI Artificial Intelligence words are seen in this illustration taken May 4, 2023. REUTERS/Dado Ruvic/Illustration/File Photo (REUTERS/Dado Ruvic/Illustration/File Photo / Reuters Photos)

Ruby, the author of "The Ruby Files - The Real Story of AI censorship," said many of Gemini's outputs are problematic because the input rules were "tainted" by training on Real Toxicity Prompts data and filters used on the AI.

"You can't filter outputs to the desired state if the system making the outputs is corrupted or ideologically skewed. Google essentially corrupted the training data and prompts by using inappropriate definitions for what was and wasn't toxic," she told Fox News Digital.

"If these prompts were the kernel of the system or served as the model for how to make it--that is an issue. That being said, it doesn't mean it is necessarily causing the issue," she later clarified. "The only way to know what specifically caused the output issue would be full transparency on internal model parameters, rules, weights and updated data. The data is important because it allows you to have a working theory of what may have gone wrong. It is a historical snapshot in time."

Only Google employees close to the situation, she added, could say definitively what the company's AI safety and responsibility pipeline looked like.

As with many AI chatbots, these prompts are not made transparent. She noted that when users ask Gemini a question, they cannot inspect the rules applied during training or the inferences used to generate the response.

She suggested that the underlying issue with machine learning technologies like Gemini is how toxicity is defined behind the scenes.

"After a baseline for toxicity is defined, technology companies use safety labels, filters, and internal scoring to shape the digital world," Ruby said. "When it comes to AI censorship, whoever controls the definition of toxicity controls the outcome. Every model action (generative AI output) will fundamentally stem from how toxicity is defined. Your version of toxicity shapes the world around you. What I deem to be toxic, you may not. What you deem to be toxic, I may not."

The issue, according to Ruby, is not the prompt but rather the foundation of the model and the definition, terms and labels that guide the actions of AI models.

"Unfortunately, we now live in a world where a few corporate executives hold tremendous power and weight on the definition of toxicity. Their view of toxicity will ultimately shape the actions of algorithms and machine learning models," she added. "The public will rarely have an inside view into how these decisions are made. They will be forced to accept the decisions, instead of having the ability to influence the decisions before they are deployed at scale in a machine learning model."

The Google AI logo is being displayed on a smartphone with Gemini in the background in this photo illustration, taken in Brussels, Belgium, on February 8, 2024. (Photo by Jonathan Raa/NurPhoto via Getty Images) (Jonathan Raa/NurPhoto via Getty Images / Getty Images)

The concern, then, is not necessarily bias from a specific employee or the dataset itself, but rather how these things are deployed together and how they affect one another. While people may try to simplify the problem down to a singular culprit, Ruby said the "truth" is "far more complex."

"The technical aspects of automated machine learning drives outputs. Lack of insight into this rule-based process is a form of censorship in and of itself," she continued. "We have been misled to believe censorship only takes place among trust and safety officers in private emails. This is part of the picture, but it is very far from the full picture. The reality is that the crux of AI censorship is the ontology associated with toxicity, bias, and safety. While adversarial prompts and research papers offer a glimpse into how a model was attacked, they don't show how a model weights words."

Many aspects of machine learning are not available to the public or are not explained in a way that shows how bias can embed itself within these systems. Questions posed by Ruby, like "How often is the training data cleaned?" "How often is a model retrained?" and "What type of behavior is being reinforced in a machine learning model?" are rarely asked or receive vague responses.

Ruby concludes that an unwillingness to tell the public the ontology associated with toxicity or safety is censorship. How words and phrases are weighted has a significant impact on which topics are visible and which are relegated to the background. Those words and phrases, Ruby noted, are then calculated and weighted in correlation with "misinformation entity maps" and natural language processing largely unseen by the public.

"For every action, there is a reaction (an output). For every prompt, there is an invisible command. If you do not understand where words sit on the threshold of toxicity and safety, you will never know what you are saying to trigger built-in automated machine learning filters to kick into overdrive," she continued.


A former Google employee previously told Fox News Digital that Gemini may have had "critical oversights in execution." (Tobias Schwarz/AFP/Jonathan Raa/NurPhoto/David Paul Morris/Getty Images / Getty Images)

While journalists and politicians on Capitol Hill have spent ample time warning about the potential impact of censoring individuals by removing them from social media platforms or restricting their ability to engage online, the concerns around AI censorship more closely resemble those surrounding the end of physical media.

In the case of AI, it is not people that are removed, but rather portions of historical records that enable citizens to engage with society as it is, rather than how an algorithm and its creators perceive it. Ruby said that altering historical records would essentially strip citizens of their right to understand the world around them.

"If someone is thrown off a social media platform, they can join another one. If historical records are scrubbed from the Internet and AI is used to alter agreed upon historical facts, this impacts the entire information warfare landscape. Even if Google did not intend to alter historical depictions, that is what ended up happening. Why? Because they over-optimized the internal machine learning rules to their ideological beliefs," she said.

Ruby also distinguished between Gemini and Google Search, the core product that catapulted Alphabet to becoming one of the world's most valuable companies.

Gemini, unlike Search, routinely editorializes its answers to users' questions and sometimes refuses to answer questions that contain specific keywords. One of the most important aspects of search optimization and ranking, Ruby noted, is intent: Does a result match the information the user was looking for?

Sundar Pichai, CEO of Google Inc., speaks during an event in New Delhi on December 19, 2022. (Photo by SAJJAD HUSSAIN/AFP via Getty Images / Getty Images)

In the case of Gemini, Ruby suggested there is an "extreme mismatch" between the user's search intent and the chatbot's generative AI output.

Google halted Gemini's image generation feature last week after users on social media flagged that it was creating inaccurate historical images that sometimes replaced White people with images of Black, Native American and Asian people.

Data provided to FOX Business from Dow Jones shows that since Google hit pause on Gemini's image generation on Thursday, Alphabet shares have fallen 5.4%, while its market cap has fallen from $1.798 trillion to $1.702 trillion, a loss of $96.9 billion.


Google CEO Sundar Pichai told employees on Tuesday the company is working "around the clock" to fix Gemini's bias, calling the images generated by the model "completely unacceptable." The company plans to relaunch the image generation feature in the next few weeks.

If Gemini continues down this path, Ruby predicted, Google will ultimately build a product that is "useless" to users and could render the company "obsolete" in the ongoing AI arms race.

"AI tools must be used to assist users. Unfortunately, they are being used to assist product teams to build a world that reflects their internal vision of the world that is far removed from the reality of users. This is why Google is rapidly losing market share to new AI search competitors," she said.