Google will mandate disclosure of digitally altered election ads

Google's requirement that election ads disclose digitally altered content comes amid the rise of AI

Google on Monday announced that it will require advertisers to disclose election ads that use digitally altered content to depict real or realistic-looking people or events.

The update to Google's disclosure requirements under its political content policy requires that marketers select a checkbox in the "altered or synthetic content" section of their ad campaign settings.

Google said it will generate an in-ad disclosure for feeds and Shorts on mobile phones and for in-stream ads on computers and television. For other formats, advertisers will be required to provide a "prominent disclosure" that users can notice.

The company added that its "acceptable disclosure language" will vary based on the context of an individual ad.

A view of Google Headquarters in Mountain View, California, United States on March 23, 2024. (Tayfun Coskun/Anadolu via Getty Images)

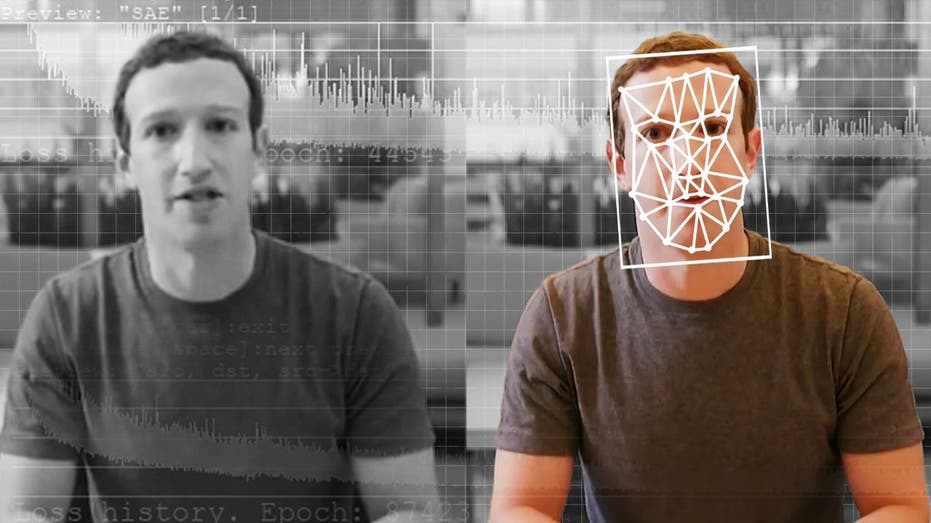

The rapid rise of generative artificial intelligence (AI), which makes it easier to create text, images and video in response to prompts, along with deepfakes used to misrepresent what a person said or did, has created new challenges for content platforms in their efforts to prevent misuse and the spread of misinformation.

During India's general election this spring, fake videos of two Bollywood actors criticizing Prime Minister Narendra Modi went viral. Both AI-generated videos urged voters to support the opposition Congress party.

AI technology can be used for voice cloning, which can make deepfakes and digitally altered content seem more realistic. (CHRIS DELMAS/AFP via Getty Images)

Separately, OpenAI said in May that it disrupted five covert influence operations that were attempting to use its AI models for "deceptive activity" across the internet as part of an "attempt to manipulate public opinion or influence political outcomes."

Meta, the parent company of Facebook and Instagram, announced last year that it would require advertisers to disclose whether AI or other digital tools are being used to alter or create political, social or election-related advertisements on the social media platforms.

A comparison of an original video and a deepfake video of Facebook CEO Mark Zuckerberg. The rise of AI has made it easier to create deepfakes. (Elyse Samuels/The Washington Post via Getty Images)

Reuters contributed to this report.