Facebook says report finding extremism on platform during 2020 election 'distorts' efforts to combat misinformation ahead of hearing

118 out of 267 groups promoting 'violence-glorifying content' were still active as of last week

Facebook says a new report that found 267 groups were promoting "violence-glorifying content" during the 2020 election "distorts" its efforts to keep the platform safe and free of misinformation.

Researchers for the nonprofit activist organization Avaaz found that, of those groups, which had more than 32 million followers collectively, 68.7% had names related to the far-right Boogaloo group, the QAnon conspiracy group or militias in the eight months before the US elections, according to the March 18 report.

"This report distorts the serious work we’ve been doing to fight violent extremism and misinformation on our platform. Avaaz uses a flawed methodology to make people think that just because a Page shares a piece of fact-checked content, all the content on that Page is problematic," a Facebook spokesperson said.

Of the 267 groups Avaaz identified, 118 were still active as of last week under Facebook's enforcement policies. Facebook reviewed those 118 groups and removed 18 after finding that they violated the company's policies.

The findings come just days before Facebook CEO Mark Zuckerberg, along with Twitter CEO Jack Dorsey and Google CEO Sundar Pichai, testifies before the House Energy and Commerce Committee on Thursday in a hearing titled "Disinformation Nation: Social Media's Role In Promoting Extremism and Misinformation."

Facebook announced new measures to combat content linked to QAnon theories and militarized social movements in October.

The company told Fox Business in January that it had removed more than 78,000 profiles on Facebook and Instagram that violated its policies against posting content related to QAnon and militia groups between August 2020 and Jan. 12, 2021.

The company, which owns Instagram, also said it removed more than 37,000 Facebook pages, groups and events related to QAnon and militarized social movements.

Facebook has banned more than 900 militarized social movements since the summer.

QAnon is the conspiracy theory surrounding an anonymous internet persona named "Q" who claims President Trump is taking part in a secret battle against a satanic child sex trafficking ring, cannibals and the "deep state." It began on the 4chan web forum but found more attention on Facebook.

"We’ve also removed millions of pieces of content for violating our policies against COVID and vaccine misinformation and remove[d] voter interference from our apps," the spokesperson said. "Our enforcement isn’t perfect, which is why we’re always improving it while also working with outside experts to make sure that our policies remain in the right place."

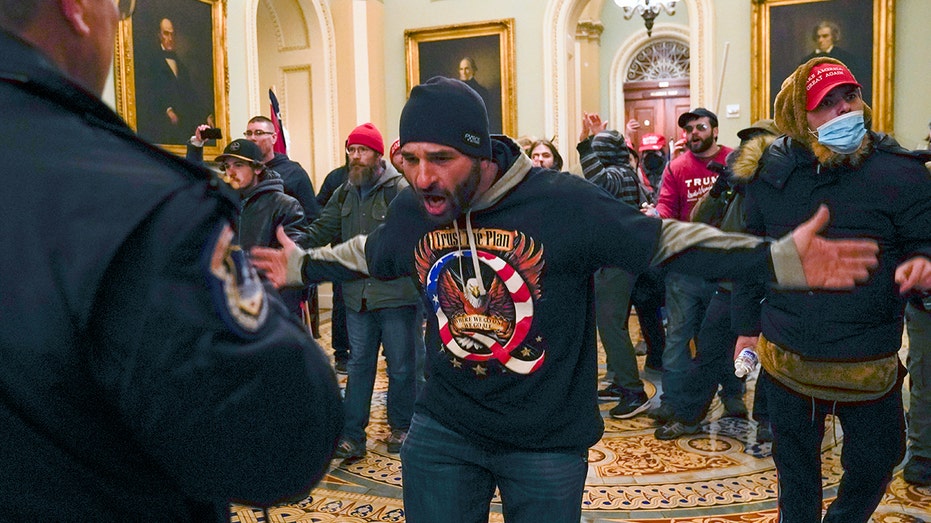

The hallway outside of the Senate chamber at the Capitol in Washington, Wednesday, Jan. 6, 2021. (AP Photo/Manuel Balce Ceneta)

Avaaz is calling on President Biden and Congress to "urgently work together to regulate tech platforms" and implement policies requiring prominent tech platforms to publish transparency reports on misinformation; update algorithms to downrank hateful or misleading content in users' News Feeds; require platforms to issue a "retroactive correction to independently fact-checked" misinformation to every user who shared it; and reform Section 230 of the Communications Decency Act to "eliminate any barriers to regulation requiring platforms to address" misinformation.

Lawmakers have pointed to social media platforms like Facebook and Twitter, and to smaller websites like Parler, which is no longer supported by Amazon, as places where incitement to violence and radicalization can gain traction, especially now that people spend more time away from others and seek engagement online amid the pandemic.

Criticism of Big Tech's role in the incitement of violence surfaced largely after the Jan. 6 Capitol riots. Many of the rioters whom authorities arrested afterward had discussed their intentions on social media; in some cases, those communications helped lead to their arrests.

Other tech giants including Twitter and Google's YouTube have been cracking down on QAnon-related and other radical content.