Former Google CEO commits $125M to launch AI initiative

Eric Schmidt and his wife, Wendy, have launched a project aimed at advancing the technology and preparing for the 'unintended consequences' that could come with it


Former Google CEO Eric Schmidt and his wife, Wendy, announced Wednesday they are launching an initiative aimed at advancing artificial intelligence and preparing for the "unintended consequences" the technology could present as it evolves, committing $125 million over the next five years to the effort.

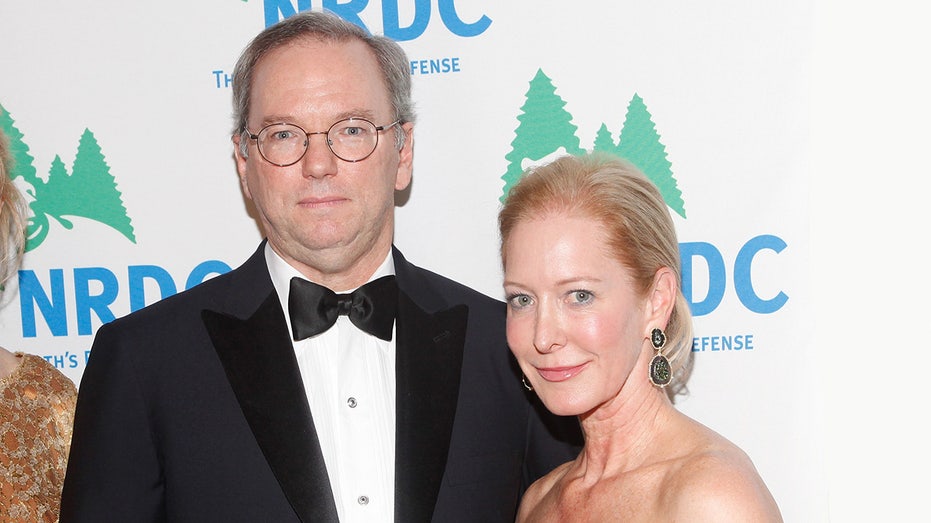

(L-R) Then-Chairman of Google Eric Schmidt and his wife Wendy Schmidt attend NRDC's 13th Annual 'Forces For Nature' Benefit at American Museum of Natural History on November 14, 2011, in New York City. (Photo by Amy Sussman/Getty Images for NRDC / Getty Images)

The project, dubbed AI2050, is being launched through the couple's philanthropic organization, Schmidt Futures, which funds ventures aimed at advancing society.

"AI will cause us to rethink what it means to be human," Mr. Schmidt said in a statement. "As we chart a path forward to a future with AI, we need to prepare for the unintended consequences that might come along with doing so."


"In the early days of the internet and social media, no one thought these platforms would be used to disrupt elections or to shape every aspect of our lives, opinions and actions," said Schmidt, who also chaired the U.S. National Commission on Artificial Intelligence from 2018 to 2021.

Eric Schmidt speaks during a National Security Commission on Artificial Intelligence (NSCAI) conference November 5, 2019 in Washington, D.C. (Photo by Alex Wong/Getty Images / Getty Images)

"Lessons like these make it even more urgent to be prepared moving forward," he said, adding, "Artificial intelligence can be a massive force for good in society, but now is the time to ensure that the AI we build has human interests at its core."

The AI2050 project will be co-chaired by Schmidt and James Manyika, Google's head of technology and society, who has been an unpaid adviser to Schmidt Futures since 2019.


Manyika has compiled a working list of "hard problems" the initiative aims to tackle.

"Through our conversations and the extensive research that has been published by many academics there are several themes that have emerged," Manyika told FOX Business in an email.

James Manyika, senior partner at McKinsey & Co. and Senior Vice President of Technology and Society at Google, speaks during the Bridge Forum in San Francisco, California, U.S., on Tuesday, April 16, 2019. (Photographer: David Paul Morris/Bloomberg via Getty Images / Getty Images)

| Ticker | Security | Last | Change | Change % |
|---|---|---|---|---|
| GOOG | ALPHABET INC. | 323.10 | -8.23 | -2.48% |


"What we aim to do is simultaneously maximize the upside potential, while mitigating the downside risks," he explained. "Paramount to both of these goals is developing more capable and more general AI that is safe and earns public trust, making sure AI performs technically well and does not harm people, and that AI systems which are developed remain aligned and compatible with the way humans designed them."

Manyika added, "Some specific examples of things we need to get right include intelligibility and explainability, bias and fairness, toxicity of outputs, goal misspecification, provably beneficial systems, and human compatibility."