Pentagon turns to AI to help detect deepfakes

DeepMedia's AI technologies are being used by the DOD to detect deepfakes

The Department of Defense (DOD) is using artificial intelligence (AI) to help service members detect deepfakes that could pose a threat to national security.

Generative AI technologies have made it easier than ever to create realistic deepfakes, which are designed to imitate the voice and image of a real person to fool an unwitting viewer. In the context of national security, deepfakes could be used to dupe military or intelligence personnel into divulging sensitive information to an adversary of the U.S. posing as a trusted colleague.

The Pentagon recently awarded a contract to Silicon Valley-based startup DeepMedia to leverage its AI-informed deepfake detection technologies. The contract award’s description states that the company is to provide "rapid and accurate deepfake detection to counter Russian and Chinese information warfare."

"We’ve been contracted to build machine learning algorithms that are able to detect synthetically generated or modified faces or voices across every major language, across races, ages and genders, and develop that AI into a platform that can be integrated into the DOD at large," said Rijul Gupta, CEO and co-founder of DeepMedia.

The Pentagon is utilizing AI tech to help counter deepfakes. (AP Photo/Patrick Semansky / AP Newsroom)

"There are also threats to individual members of the Department of Defense, enlisted and officers, from the highest or lowest level could be deepfaked with their face or voice and that could be used to acquire top secret information by our adversaries internationally," Gupta said.

Generative AI and large language models help power DeepMedia’s deepfake detection systems.

"Our AI can actually take an audio or video, extract the faces and voices from that piece of content automatically and then run the face or the voice or both through our detection algorithms," he said. "Our detection algorithms have been trained on millions of both real and fake samples across 50 different languages and have been able to determine with 99.5% accuracy whether something is real or whether it has been manipulated using an AI in some way."

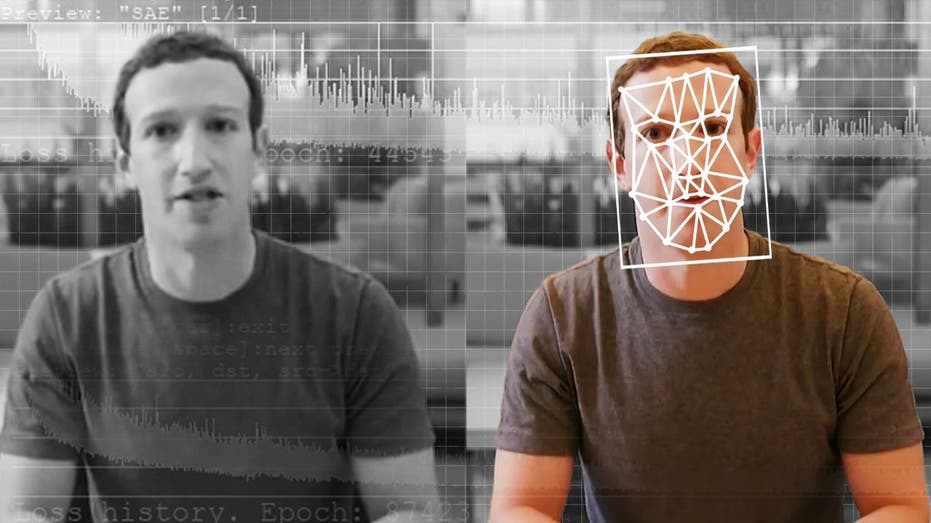

Images and videos from the internet can be used to create deepfakes. (Reuters TV / Reuters / Reuters Photos)

"When it determines that a piece of content has been manipulated, it then highlights and alerts a Department of Defense user to this fact and will pinpoint exactly what part of the audio or video has been manipulated, the intent behind that manipulation, and what algorithm was used to create that manipulation so then the analysts at the Department of Defense can escalate that to the appropriate person on their end," Gupta explained.

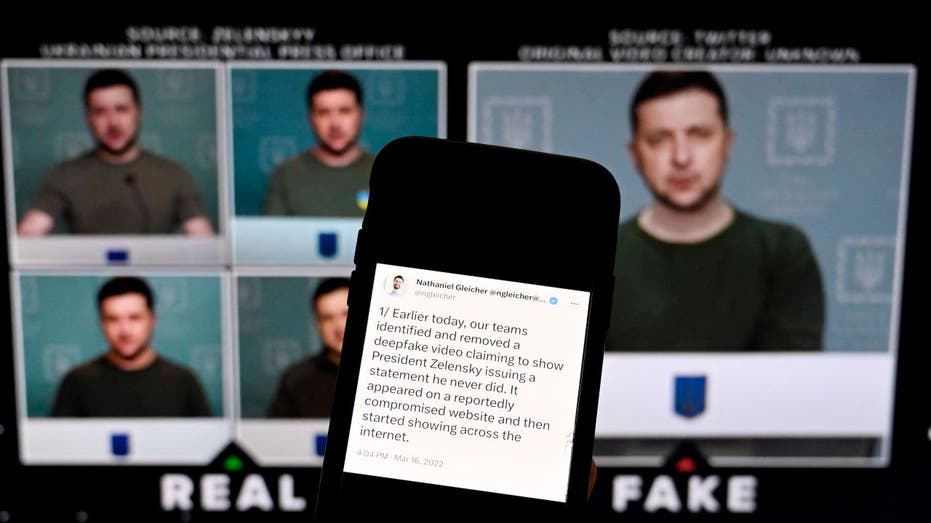
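
The workflow Gupta describes (extract faces and voices, score each one, then point an analyst to the manipulated portion) can be illustrated with a minimal Python sketch. This is not DeepMedia's code: `Segment`, `Alert`, `flag_manipulated`, `toy_detector` and the 0.5 threshold are all hypothetical names and values chosen for illustration, and the stand-in scorer does no real detection. It also leaves out the intent and source-algorithm attribution he mentions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Segment:
    """One extracted face track or voice clip (hypothetical structure)."""
    kind: str        # "face" or "voice"
    start_s: float   # start time in seconds
    end_s: float     # end time in seconds
    features: list   # placeholder for whatever a real detector consumes


@dataclass
class Alert:
    """A flagged span that would be surfaced to an analyst."""
    kind: str
    start_s: float
    end_s: float
    score: float     # detector's estimated likelihood of manipulation


def flag_manipulated(
    segments: List[Segment],
    detector: Callable[[Segment], float],
    threshold: float = 0.5,  # assumed cutoff, not a published value
) -> List[Alert]:
    """Score each segment and return alerts for those at or above threshold."""
    alerts = []
    for seg in segments:
        score = detector(seg)
        if score >= threshold:
            alerts.append(Alert(seg.kind, seg.start_s, seg.end_s, score))
    # Most suspicious first, so an analyst can triage from the top.
    return sorted(alerts, key=lambda a: a.score, reverse=True)


def toy_detector(seg: Segment) -> float:
    """Stand-in scorer: the mean of the features, clamped to [0, 1]."""
    if not seg.features:
        return 0.0
    mean = sum(seg.features) / len(seg.features)
    return max(0.0, min(1.0, mean))
```

Calling `flag_manipulated(segments, toy_detector)` returns only the spans whose score clears the threshold, each with its start and end time, which is the "pinpoint exactly what part" step in the quote. A real system would replace `toy_detector` with a trained model.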

DeepMedia co-founder and COO Emma Brown noted that Russia’s war against Ukraine provided a key example of the importance of multilingual deepfake detection. "Last year there was a deepfake of President Zelensky at the beginning of the war in Ukraine and they had him laying down arms, and the only way that was able to be detected at that point was because linguists knew the accent was wrong. And so those are the types of moments that we’re able to automate within our system because of our generative technology."

Deepfakes have been used to sow confusion about world leaders, including Ukrainian President Volodymyr Zelensky. (Photo by OLIVIER DOULIERY/AFP via Getty Images)

The DOD has previously contracted with DeepMedia to create a universal translator platform to "accelerate and enhance language translation among allies." That use case for the company’s technology has drawn the attention of the United Nations, which Gupta said is leveraging DeepMedia’s generative AI capabilities to "promote automatic translation and vocal synthesis across major world languages" – a concept he calls "deepfakes for good."

"The more people use that generative product, the more data we get from both the real and the fake side, which makes our detection better," he added. "Our detectors are powered on the generative side, and the better our detectors get, the better our generators get as well."

Deepfakes can be created from images, and cloning someone's voice requires only about 15 seconds of audio. (Elyse Samuels/The Washington Post via Getty Images / Getty Images)

DeepMedia was also recently invited by the government of Japan to discuss ethical AI and the regulation of AI technologies. The company said it discussed the integration of its DubSync technology into Japanese government systems to enable real-time communication with military allies, as well as DeepMedia’s DeepID platform to fully authenticate communications and thwart potential deepfake attacks by adversaries.