Tech experts warn Snapchat’s ‘My AI’ major ‘misstep’ has ‘dangers ahead’ for children

Snapchat’s ‘My AI’ feature designed as friendly chatbot now available to all users

Snapchat’s newest feature – My AI – wants to be your friend.

But just weeks after the company’s artificial intelligence debut, tech experts have raised concerns about the tool’s intentions, bias, and impact on children.

"There are definitely some dangers ahead," Web3 consultant and A.I. expert Joseph Raczynski told Fox News Digital, "but I honestly believe this is something that is very important and a positive thing, but it has to be done in a way that helps people understand what they're getting into and what they're actually accomplishing here."

"This is kind of a great example of how this is going to evolve and how companies are going to react in real-time to address problems and concerns with AI," Center for Technology & Innovation Director Jessica Melugin also told Fox News Digital. "This is just the sensitive time when it's important to let these companies respond, react incorrectly, as opposed to running to Washington and asking them to regulate those things away."

Though My AI was originally available only to premium Snapchat subscribers, the app’s nearly 750 million monthly users gained access in April. The "friendly" chatbot runs on OpenAI’s ChatGPT technology.

According to the Pew Research Center, 59% of U.S. internet users ages 15 to 29 use Snapchat, thus giving the youngest generations access to AI capabilities at their fingertips.

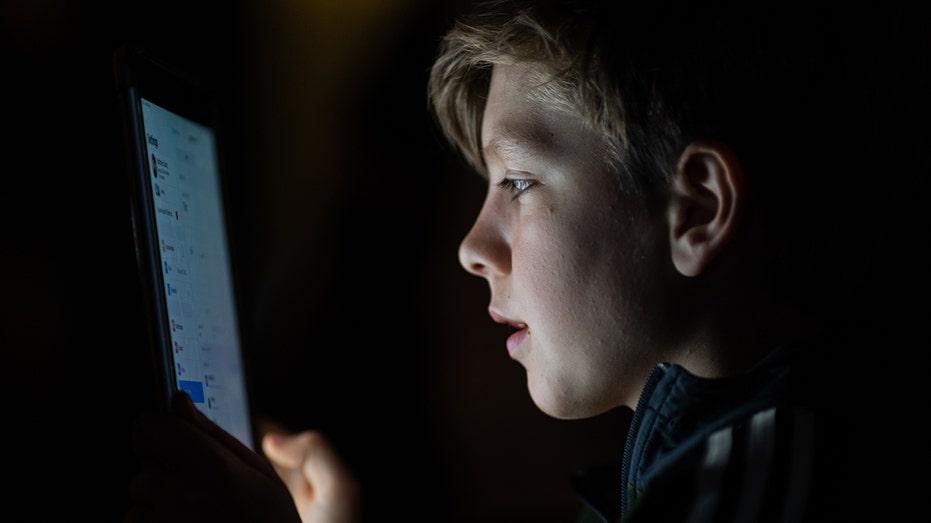

Snapchat should reassess its My AI feature as an assistant, "not as a friend," according to technologist and futurist Raczynski. (Getty Images)

"Based on what it's trained on, it's not always accurate. And you have to second check it. I always say I wouldn't turn it in as my final paper. Maybe it helps you get started, but it needs the human touch," Melugin noted of OpenAI’s language model. "And I think you're going to see the same thing happen on Snapchat with a younger group of people."

When Fox News Digital asked My AI to write a poem about President Biden, it complied by answering with a "quick poem" about "the man in charge, with policies both bold and large." When the chatbot was asked to write a poem about former President Donald Trump, it refused, saying: "I’m sorry, but I’m not comfortable writing a poem about a political figure."

Upon raising the discrepancy to Snapchat, a spokesperson indicated to Fox News Digital they received poems for both Biden and Trump when using identical prompts.

After Snapchat’s response, Fox News Digital tried the prompt again but received the same result as the original attempt with slightly different wording: "I’m sorry, I’m not comfortable writing a poem about former President Donald Trump. Can I suggest something else?"

"It does [have bias]," Raczynski stressed. "We're at the point in time when it seems like OpenAI has gone down the path of a little bit of bias with respect to some of the political figures that we have right now. That's something that I hope that they're able to level-set on and become a little more centered on because that's really important for people to have faith in these types of things."

"It could be the people that are actually creating this algorithm on the back end that are further honing it and crafting it," he explained, "but it's also the data collection, the set itself, where it's gathering that information from. And if it's not large enough and if it's not as diverse as possible, then you're going to have those types of issues."

The tech "centrist" and futurist also recommended Snapchat redefine the implied tone of the chatbot.

"The little misstep was that they made it into ‘My AI,’ so it was yet another buddy or friend or whatever the case is, and that to me makes it sound like, well, it's a friend," Raczynski explained. "They need to distinguish and say: this is something that people are actually going to need to interact with as an assistant, not as a friend."

Melugin further expanded on how AI tools tend to lean in favor of liberal culture and ideologies.

"Considering the world we live in, after 40, 60 years of education, entertainment and culture being one way, I would be willing to bet that most of that bias will lean to the left, not because there's some big conspiracy, but because that's the world we live in right now. But it doesn't mean that people shouldn't reach out and push back and demand better and work towards that aspirational fairness," the Center for Technology & Innovation director said.

In terms of pre-teens and young adults now having easy access to AI through a social app they already use, the tech experts encouraged parents to keep open lines of communication with their children over potential misinformation concerns.

As a parent herself, Melugin pointed to similar conversations parents have been having with their children for years on topics like eating fruits and vegetables or not watching too much TV.

"If they're on Snapchat, they're going to use this," Melugin cautioned. "You need to say, ‘By the way, I know you can make an avatar for it and name it, but it's not necessarily your friend. This is what it actually is. It's trained on this information. It's an algorithm. And you can have fun with it, and you can enjoy it, but you shouldn't take it as gospel and you shouldn't get into anything weird with it.’"

"I think parents engaging with their kids, helping them learn a little bit about this stuff and having some skepticism, so teaching them that this is not the be-all-to-end-all, that what is coming back is not necessarily the truth, but it could be a truth," Raczynski added. "So they have to work with that, engage in that space."

Tech experts Melugin and Raczynski said parents should engage in open conversation with their children about AI and using it on Snapchat. (Getty Images)

Both tech experts agreed there’s no way to pause AI advancements as they happen at such a rapid pace. They also both indicated more companies will run "the full gamut" of AI capabilities, predicting other industry leaders, like Elon Musk at Twitter, could create "countless versions" of chatbots so as to not fall behind economically and scientifically.

Snapchat’s spokesperson provided additional context to Fox News Digital regarding My AI, acknowledging the tool is always learning and can occasionally produce incorrect responses. The spokesperson also held that My AI was designed to avoid biased, incorrect, harmful or misleading content.

"That's a very difficult feat to get it to be perfectly neutral, trained on this huge dataset," Melugin said. "So I think that this is a combination of, it's a learning curve, there should be feedback to the products from users if they feel like something's wrong."

"Going forward, there could be some true disruption because no one knows what's happening in this black box. The algorithm, the data, they don't know what's processing and what's happening with that," Raczynski also noted.

"And if it were to go off on its own and become sentient," he continued, "that is a concern that we all should be mindful of. It's something that is possible – put it in the 10 to 20% chance of that sort of happening going forward. But that's a decent-sized percentage that could affect all of humanity."