Fake ChatGPT, Bard ads con Facebook users: report

Ads have reportedly been running on Facebook for weeks

Fake ChatGPT and Bard ads are reportedly spreading dangerous malware.

The ads have been running on the social media platform Facebook for weeks, according to a report in The Washington Post, and promote software that claims to offer a time-saving way to send questions to the artificial intelligence tools.

Instead, the bad actors steal victims' online accounts, and the issue appears to extend to other sites. The hackers sometimes simply steal the cookies and sell them, or they may run scams using the accounts, the Post reported.

The ChatGPT website is displayed on a laptop screen in this illustration photo taken in Krakow, Poland, on April 11, 2023. (Photo by Jakub Porzycki/NurPhoto via Getty Images)

OpenAI said the ads were not from the company, according to the Post. OpenAI did not immediately return FOX Business' requests for comment.

Google confirmed that the ads were not official Bard ads and that it was looking into the matter.

The Post also highlighted that the details of the malware distribution have changed repeatedly, suggesting that the attackers are adapting and implementing countermeasures.

A quick search on Facebook for a keyword shared in the report, "password 888," turned up more than 30 results in April.

Some of those ads started running in March. A Meta spokesperson told the Post that bad actors frequently evade detection but that the social media giant tries to disrupt such threats and uses automated systems to catch similar uses.

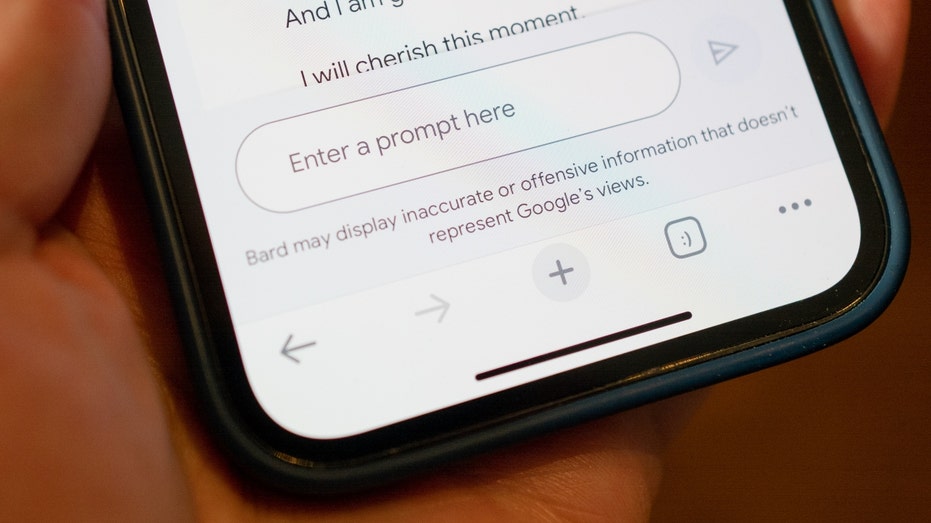

A person holds an iPhone showing the prompt entry field of Google Bard, a generative A.I. language model (chatbot), in Lafayette, California, on March 22, 2023. (Photo by Smith Collection/Gado via Getty Images)

Facebook has taken down some of those ads.

Gabby Curtis, a Meta spokesperson, said bad actors rely on a diverse set of lures to disguise malware and are rapidly changing their deceptive tactics. They host malware on file-sharing services to avoid detection and use cloaking to hide links from automated review systems, relying on popular marketing tools such as link shorteners.

They target many platforms, including file-sharing services, and Meta works to detect, report and enforce against that activity.

Meta encourages users to be cautious about what software they install and advises them to uninstall any software that seems to trigger suspicious activity.

All of this comes as emerging artificial intelligence technology is being weighed on an international scale.

Federal regulators in the U.S. have asked the public for input on policies that would hold such systems accountable and help to manage associated risks.

Symbolic photo: The Facebook app is displayed on a smartphone on April 3, 2023, in Berlin, Germany. (Photo by Thomas Trutschel/Photothek via Getty Images)

"Responsible AI systems could bring enormous benefits, but only if we address their potential consequences and harms. For these systems to reach their full potential, companies and consumers need to be able to trust them," Alan Davidson, the National Telecommunications and Information Administration administrator, said Tuesday.

This request follows an open letter signed by billionaire Elon Musk, Apple co-founder Steve Wozniak and others calling for a moratorium on "giant A.I. experiments," citing potential risks to society.

Fox News' Chris Pandolfo contributed to this report.