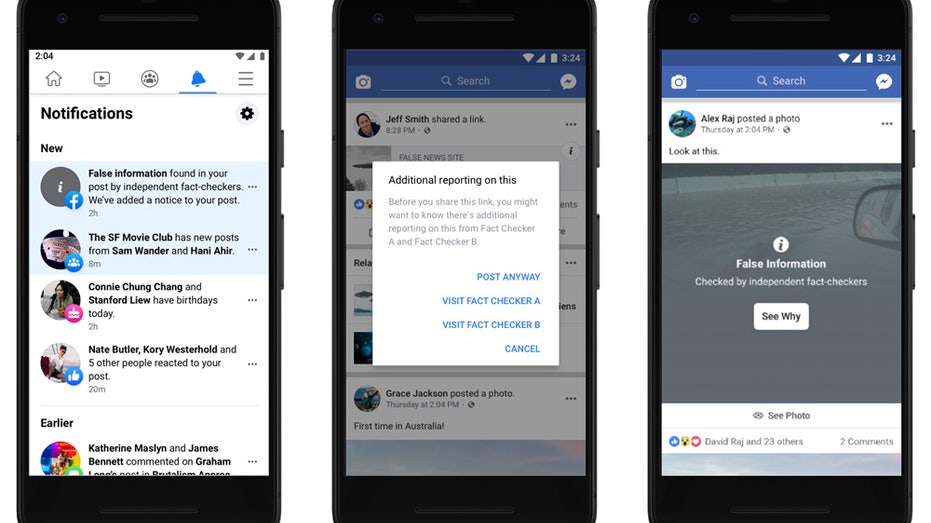

How Facebook fact checks work

Facebook has come under fire for not fact-checking President Trump's posts

Facebook and its founder, Mark Zuckerberg, have stated that the website should not be the arbiter of truth.

Because social media platforms are still expected to find and label or remove false information that their users post, Facebook has partnered with independent, third-party fact-checkers to do that work rather than fact-checking posts itself.

The tech giant uses both algorithms and human employees to identify false information. Users can flag posts for Facebook employees to review, and the site's machine learning technology picks up on content that draws a high volume of comments from people "saying that they don’t believe a certain post," according to the site.

Then, the company's independent, third-party fact-checkers review that information and determine how accurate it is. Facebook partners with more than 60 global fact-checking organizations that moderate content in 50 languages.

Facebook fact checks (Facebook.com)

After fact-checkers rate a post, Facebook labels misleading content and limits its visibility depending on the rating and the subject of the information. Ratings include "false," "partly false," "false headline" and "true," the last applied when fact-checkers determine that a flagged post is, in fact, correct.

Once a post is labeled, Facebook includes a link that users can click for more information on the subject the fact-checkers reviewed.

There have been a number of instances in which Facebook users and publishers disagreed with fact-checkers' decisions. Publishers can dispute ratings or make corrections by contacting the fact-checkers directly.

"Fact-checking partners are ultimately responsible for deciding whether to update a rating, which will lift enforcement on the content, Page or domain," a FAQ about the site's fact-checking program reads.

Facebook sign-in page on a smartphone and computer. (iStock)

Facebook also removes some misinformation posted to the site.

"When it comes to fighting false news, one of the most effective approaches is removing the economic incentives for traffickers of misinformation. We've found that a lot of fake news is financially motivated," a blog post from the company reads.

When certain pages or websites repeatedly share information that is determined to be misinformation, Facebook will monitor those sources more closely and limit their visibility, according to the platform.

The social media site recently came under fire for not fact-checking posts from President Trump after Twitter added labels to the same posts. Facebook has since updated some of its policies, saying in a June 26 blog post that it will now flag content deemed "newsworthy," including posts from politicians, that it previously would have left unflagged.

The tech giant said in a May 12 update regarding its coronavirus-related misinformation policies that it put warning labels on more than 50 million pieces of content related to the pandemic "based on around 7,500 articles by [its] independent fact-checking partners."